How Law School Scholars Are Using Data to Study Policing

As national concern over police misconduct and violence has grown over the last decade, scholars have increasingly looked to data to better understand the complex issues at play and to examine potential reforms. Many Law School professors are leaders in this work—fighting for access to records, explaining the limitations of policing data, and using empirical research to explore patterns in police behavior, complaints, discipline, intervention, and much more.

To better understand why transparent data are essential as well as what they can, and can’t, tell us about policing, Claire Stamler-Goody spoke with four members of the Law School faculty who are engaged in empirical research on issues related to policing. Clinical Professor Craig Futterman, the director of the Law School’s Civil Rights and Police Accountability Clinic, discussed the effort to make Chicago Police Department misconduct and complaint records public, as well as the patterns he and his students unearthed once they had the data. Stamler-Goody spoke with Professor John Rappaport about two of his recent empirical studies—one on officers who land new jobs after being fired for misconduct, and another that compared insurance liability claims data with lawsuit and payout data to determine whether police behavior is getting worse. Professor from Practice Sharon Fairley discussed her comprehensive study of civilian police oversight agencies in the 100 largest US cities, work that included assembling the data set from scratch. And Professor Sonja Starr, who has used quantitative analysis in her scholarship for years, explained the normative and empirical challenges of using data to explain racial disparities in policing. The following conversations have been edited for length and clarity.

Fighting for Open Records

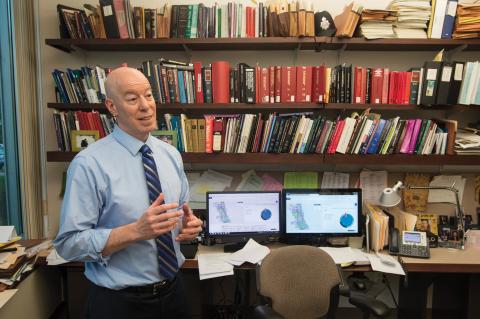

Craig B. Futterman

Clinical Professor of Law, Director of the Civil Rights and Police Accountability Clinic

Stamler-Goody: Beginning in 2007, you engaged in a legal battle with the City of Chicago to release complaint records against the Chicago Police Department—and, in 2014, the Illinois Appellate Court issued an opinion making police misconduct records public across the state of Illinois. Why did you make it a priority to make these records available to the public?

Craig Futterman: All of our advocacy and research begins with the lived experiences of people who have been most impacted by police abuse. Through our work with a woman who lived in public housing who was repeatedly sexually abused by a group of police officers, we obtained data that showed that the officers who assaulted her had been engaged in a pattern of abuse of Black people in public housing, and that the odds that they would be disciplined for abusing a Black person were less than one in a thousand. But we obtained that data under a strict confidentiality order, so we were not allowed to share that information with our clients and their neighbors in Chicago public housing who had long complained about the very patterns of abuse and state of impunity confirmed by police data.

So, we made a request for official government data on Chicago Police Department misconduct complaints that was being kept secret—secrecy that was being used to deny the reality of police impunity and dismiss the lived experience and knowledge of people on the ground as too “anecdotal,” as though their experiences do not count as real data. Not surprisingly, the mayor and police department fought us tooth and nail. We then engaged in a long, drawn-out battle to establish the legal principle that police misconduct complaint records belong to the public. And after establishing the principle as a matter of Illinois law, we then sought to make that information and data accessible to everyone, from people who were working on the ground, to policy analysts, to investigative journalists, to people in prison, to academics, other scholars, and researchers.

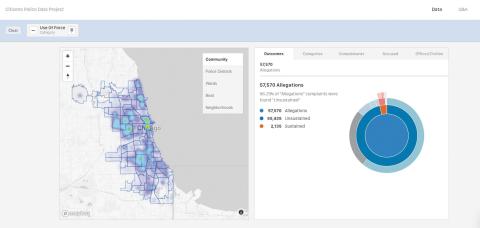

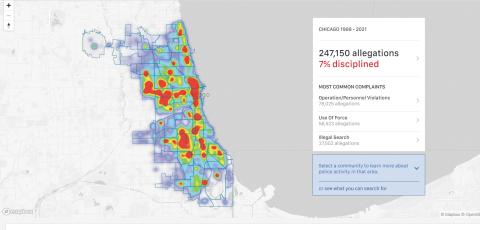

Stamler-Goody: Once you had the data, what did you uncover?

Futterman: Police abuse is a highly patterned phenomenon. We found that a small percentage of the police department is responsible for the lion’s share of abuse. We learned that they work in groups, which led to the identification of corrupt units and groups of officers within the police department.

We were also able to highlight how much the social status of the victim matters, both in terms of who is abused and who is believed. Even though Black people are far less likely than Whites to bring official misconduct complaints when abused by police, they are still 10 times more likely to be identified as victims in police misconduct complaints than Whites. When White folks brought complaints against police for misconduct, their complaints were many times more likely to be sustained than Black folks’ complaints. And we saw differences by race looking at police officers themselves—Black officers were as many as two to three times more likely to be disciplined than White officers for similar charges.

We’ve also done some really interesting mapping of the data that supports findings of police abuse being a contagious phenomenon. It’s not just groups of officers engaging in abuse. Those who work with corrupt officers often end up covering up abuse—officers exposed to abuse are more likely to engage in abuse in their own careers.

We’ve also used the data to gauge the effectiveness of early intervention programs. After intervention, are we seeing a difference in behavior? Are abuse complaints going down or up following an intervention? Are officers who have accumulated the most abuse complaints in a jurisdiction flagged for potential intervention by these early identification systems? Does the City of Chicago use this information to investigate and fire officers engaged in patterns of abuse? A part of what is so appalling has been the willful blindness to obvious patterns of abuse.

There really have been so many different ways in which these data have been used to produce knowledge. Scholars, journalists, and policymakers regularly rely upon our data for their work. People falsely arrested or wrongfully convicted have used the data to win their freedom. Individuals abused by police have used the data to prove civil rights violations. The US Department of Justice used the data to establish a pattern and practice of civil rights violations in Chicago.

Stamler-Goody: In June 2020, you won a case before the Illinois Supreme Court that saved thousands of Chicago Police Department misconduct records from being destroyed. The case involved a contract with the police union requiring the destruction of misconduct records after five years. You fought that rule, and the court agreed. What happens when records aren’t only hidden from the public, but destroyed entirely?

Futterman: Gone means gone. It is erasing knowledge and erasing lived stories of abuse and torture. There are people who were literally tortured by Chicago police officers who had been engaging in a pattern [of this kind of abuse]. Some of those people who [falsely confessed after being tortured] remain wrongly incarcerated in prison. The destruction of these records means they would be denied the very evidence they need to become free.

Stamler-Goody: What still needs to be done?

Futterman: It is beyond shameful that we as a nation don’t require certain basic data to be kept and reported. Today, there still is no official way of answering the question, “How many people have been killed in the United States by police this year?”

The data set that we developed here in Chicago needs to be replicated elsewhere. That same data on complaints of police abuse should exist publicly everywhere throughout the nation. And not just for internal analysis within a jurisdiction, but for comparative analyses across jurisdictions. Being unable to look at these problems on a national scale has limited our imagination of the remedies that are needed, and has cabined those remedies. I think doing nationally what we’ve begun to do here in Chicago, with this remarkable archive of human stories of abuse, would be transformative—the democratization of public data that has been guarded by police translates to a fundamental redistribution of power from the state to the people. And the knowledge and ideas generated by this revelation can be the key to unlocking a more fair and just system than we presently have.

Examining Police Behavior

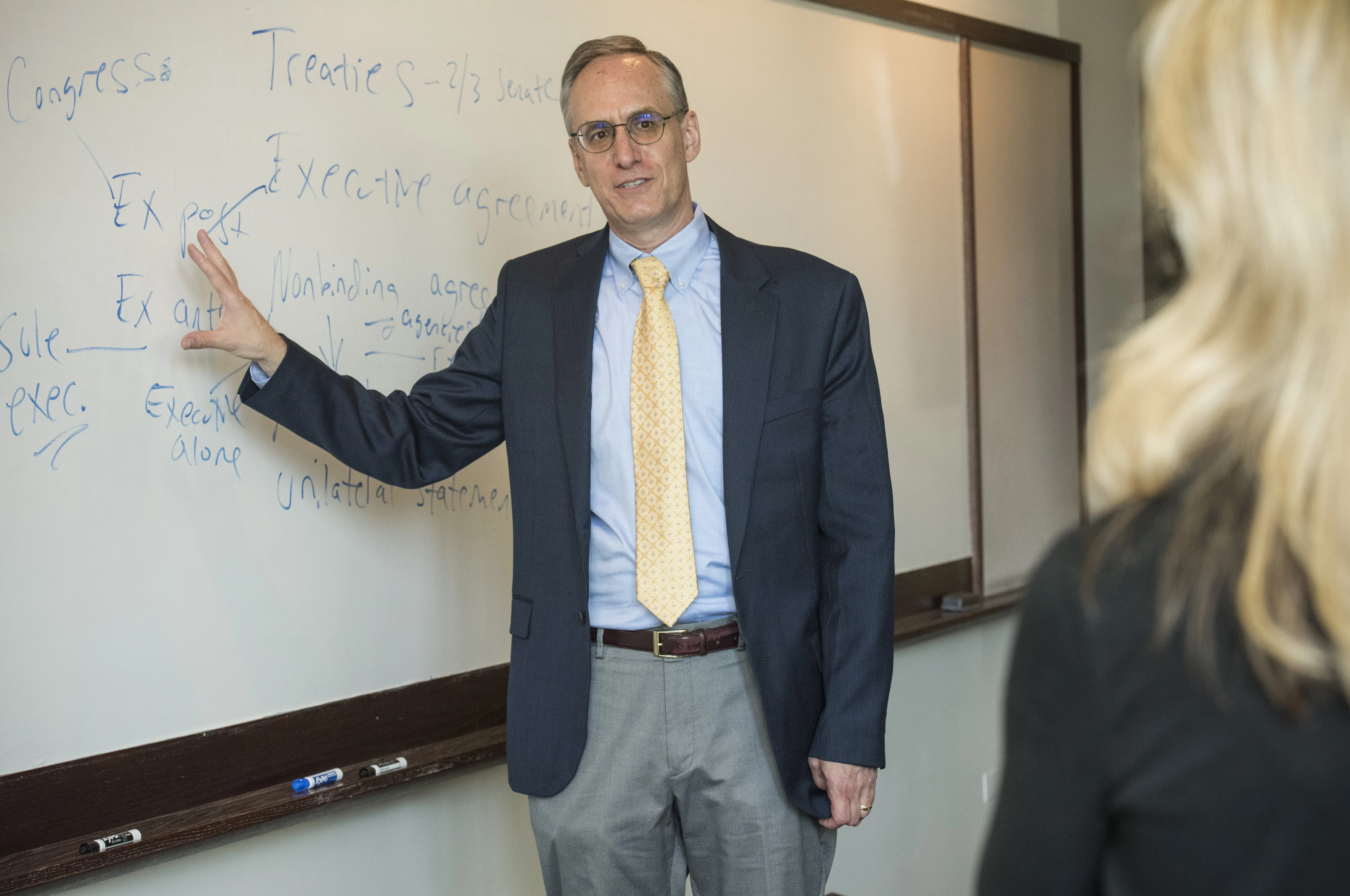

John Rappaport

Professor of Law, Ludwig and Hilde Wolf Research Scholar

Stamler-Goody: You have produced a number of papers in recent years that used empirical analysis to examine policing and reform. What drew you to this work?

John Rappaport: I had a sense that, collectively, we were putting the cart before the horse, proposing lots of changes to policing without a complete understanding of what was causing the problems. So I became really interested in that question—why do police behave the way they do? And that’s a question that has obvious policy relevance, because once you figure out why they behave the way they do, then you have a shot at changing their behavior.

Stamler-Goody: You recently published a paper in which you studied police behavior using liability insurance claims data and then compared that data with litigation and payout data. What can different types of data tell us about policing, and how do you go about choosing a particular set to study?

Rappaport: The type of data matters a lot, so much that you could reach 180-degree-wrong conclusions if you study it using one data set instead of another. It’s very common, for instance, to use civilian complaints to talk about how police are behaving, but it’s important to remember that when you’re looking at civilian complaints, you’re capturing both the behavior of the police and the behavior of the civilians who file the complaints. You could see vastly different rates of complaints in two different neighborhoods where police are behaving the same but the relationship with the community is different, for example.

Insurance claims data allowed me to study issues that are otherwise difficult to perceive—it captures a much larger set of events than civil litigation and payout data do. Among other things, if you look at payouts or lawsuits, it looks like everything is getting worse over time, but when you look at claims data, you see something very different.

Stamler-Goody: You found that while payouts and lawsuits have been on the rise, liability insurance claims have stayed the same or perhaps even declined. Was this surprising?

Rappaport: I wasn’t actually shocked to see that the number of claims is decreasing over time. The general trends in policing in the United States have been to professionalize the workforce, to diversify it across gender and racial and ethnic lines, and to step up the level of training. That does not mean that every agency is doing a good job with these things, or even that every agency is trying, but it’s inching in a positive direction overall. I think insurers are trying to improve behavior, and political leadership is too. They struggle, they take steps backwards, but it’s the direction in which people are trying to go.

Stamler-Goody: So why are lawsuits and payouts going up?

Rappaport: What my coauthor and I conclude is that public attitudes are changing. People are becoming more confident about standing up for themselves and challenging the police. Juries are becoming more comfortable discrediting police testimony and imposing liability. That’s what you see reflected in the payout figures.

Stamler-Goody: You also recently conducted an expansive Florida-based study on wandering officers—police officers who have been fired for misconduct and then find work at another agency. Why did you study these officers in particular?

Rappaport: I was coming across lots of newspaper articles about wandering officers. People would say, “This is outrageous, these cops get fired, get hired somewhere else, and then go on to hurt more people.” And it did strike me as outrageous. But there are also countless stories about cops who hurt people and hadn’t been fired before. I wanted to try to figure out if wandering officers actually behave differently from officers who hadn’t been fired.

Stamler-Goody: What did you learn about wandering officers?

Rappaport: First, wandering officers are pretty common. At any given time, an average of just under 1,100 officers in the state of Florida were wandering officers, which seems high to me, especially when you consider how many interactions they might have with the public in a given year.

My coauthor and I were also able to identify certain patterns in their movement. The general trend is that officers are moving from bigger, better-resourced agencies to smaller, more resource-strapped ones. Most people want to work in bigger cities. They’re seen as desirable places to live, and the salaries might be higher. And when you get fired from one of those jobs, you have to move outward to smaller, poorer agencies that pay less. And they’re probably willing to hire you because they don’t get as many applicants.

The third major finding is that wandering officers do behave worse on several measures. They are about twice as likely to get fired as other officers. And they’re about twice as likely to incur what Florida calls moral character violations—which are essentially serious complaints of misconduct.

Stamler-Goody: What are the biggest challenges to finding and gathering data on police behavior?

Rappaport: It’s very difficult to get good data on the things we care about a lot. For instance, pretty much every paper that talks about police use of force relies on data self-reported by the police themselves. We certainly shouldn’t take this data at face value. And then there’s the fact that policing is incredibly decentralized in the United States. You’ve got 18,000 law enforcement agencies that all decide for themselves how to collect the data. So you’re often not comparing apples and apples because different agencies are collecting different bits of information and defining their terms differently. Also, dealing with 50 different states means 50 different public records laws. Florida has very good public records laws—that’s why there are so many law enforcement papers written about Florida. We talk about the extent to which what happens in one state can and cannot be generalized. One state’s data may tell us something about policing elsewhere, but it doesn’t tell us everything.

Stamler-Goody: You recently joined the National Police Early Intervention and Outcomes Research Consortium. This group will use police operations data from Benchmark Analytics, a provider of research-based police force management and early intervention analytics software, to identify factors that lead to police misconduct. Tell me more about this work and what you hope will come of it.

Rappaport: Benchmark Analytics is integrating data from nearly every aspect of police operations—civilian complaints, training, and much more—and then leveraging it to learn things about officer behavior. And they’re making that data available to a small group of researchers. It’s going to cover a wide variety of agencies in terms of geography and size. It links together different kinds of data in a way that has either never been done or is extremely time-consuming to do. The hope is that we’ll be able to make progress on a lot of questions that have been hampered by data-access problems.

The long-term goal of the consortium is to try to learn more about what interventions work in improving police behavior. We’re already getting pretty good at predicting which officers are likely to have problems, but what we don’t know is what to do next. We’re trying to learn more about what interventions, if any, might work to get high-risk officers back on track.

A Comprehensive Study of Civilian Oversight

Sharon R. Fairley

Professor from Practice

Stamler-Goody: In 2019, you set out to study civilian police oversight in hopes of seeing how Chicago’s experience fit the trends in other cities. Because there wasn’t a single source of information, you combed through information from state statutes, municipal ordinances, entity websites, and local news articles and ultimately created your own data set. The result was a comprehensive study of civilian oversight agencies in the 100 largest US cities. What did you find?

Sharon Fairley: The biggest takeaway for me: it really isn’t enough to have just one form of oversight. You need to create a multitiered system to have the greatest impact on policing. Larger cities, which tend to have more experience with civilian oversight, often have two or three forms of oversight—maybe an investigative form plus a review form plus an advisory piece. Whereas in smaller cities, they usually start with just one form of oversight, typically the review model, where the oversight agency reviews the investigative work that’s done by the police department itself. Legislators creating these systems are beginning to stress that the multifaceted approach is necessary in order for civilian oversight to be truly effective.

Stamler-Goody: You also recently updated your survey data to learn about how cities were incorporating civilian oversight into police reform strategies adopted in the wake of the killing of George Floyd. What observations can you share from the most recent data you collected?

Fairley: It has been really interesting. Civilian oversight has developed quite dramatically since the summer of 2020. The activism that played out in city streets has definitely translated into political action. Dozens of cities across the country—both large and small—have built new or improved civilian oversight entities. At this point, almost three-quarters of the top 100 US cities by population have some form of civilian oversight. The trend among larger cities building multitiered systems continues. But we also see smaller cities starting out by creating two entities, such as a review board combined with an agency that monitors police department investigations and operations.

Stamler-Goody: Why is data transparency—by police and by the civilian agency itself—so essential to accountability efforts?

Fairley: For a civilian oversight entity to be effective, it needs access to the information about how the department is operating. Sometimes those mechanisms for access are actually baked into the ordinance that creates the agency. If a police department isn’t transparent about, for example, its use of force, it’s really hard to understand what is going on in the field with police-citizen encounters and make appropriate policy and training recommendations. Another essential ingredient is transparency by the civilian oversight entities themselves. A community needs to see how oversight entities go about their work. Without this kind of transparency, citizens often are hesitant to trust their decision-making. The whole purpose of civilian oversight is to engender trust and legitimacy in the police accountability infrastructure.

A big challenge is that there are sometimes impediments written in the law—in collective bargaining agreements and also in some state statutes—that prevent oversight agencies from making this information publicly available. That’s part of the growth process for civilian oversight—to figure out how we can clear away some of those roadblocks to transparency as the movement starts to mature.

Stamler-Goody: What else are you studying in this area?

Fairley: In the summer of 2020, I began researching oversight in the county-based law enforcement context and found that civilian oversight has been adopted more slowly by those agencies. I think part of the reason is that most county-based law enforcement agencies, unlike municipal police departments, are led by a sheriff, which is an elected position. And sheriffs may claim they don’t need civilian oversight because they have the voting process. Also, many county-based law enforcement offices run jails in addition to providing policing services. We’ve seen many use-of-force incidents, and a neglect in handling those incidents, which has caused community concern and a demand for more civilian oversight in this context.

I’m also working on a piece about how civilian oversight entities can ensure that police abuse complainants have a voice in the disciplinary process. One of the things we see in most police disciplinary systems is that victims of police misconduct don’t have much of a role in the disciplinary process. This is very different from the criminal justice context where, by law, victims often have very specific rights to be involved in the process. They have the right to speak at a plea hearing or the right to provide an impact statement. But that’s not really true in the context of police disciplinary matters. I’d like to see that change.

Exploring the Limitations of Policing Data

Sonja B. Starr

Julius Kreeger Professor of Law and Criminology

Stamler-Goody: You have spent years using empirical analysis to study racial disparity, and you recently wrote a paper examining the challenges of explaining race gaps in policing. Tell me more about this work and its genesis.

Sonja Starr: My interest in policing really stemmed from my prior research on disparities elsewhere in the criminal justice process, such as sentencing, charging, and plea bargaining. You can analyze disparities as cases move through the criminal justice process, but you have to have a baseline for deciding which cases are comparable to one another. So in that prior research, we looked at people who had been arrested for the same offenses and then tracked what happened to them as they went through the process. But that data didn’t tell us anything about the policing decisions that got people into that arrestee pool to begin with, and determined what offenses they would be arrested for. When you turn to analyze policing disparities, however, it’s a lot harder, because the relevant pool isn’t just the people already in the criminal justice system, it’s all people who are potentially subject to policing—which is to say, all people. From an empirical research perspective, that’s a big problem, because criminal justice data sets don’t include all people, they only include people who the system has collected data on, mainly arrestees or, in some jurisdictions, people who are stopped by police.

Meanwhile, when I wrote that paper [on explaining race gaps in policing], the widespread public attention being given to the Black Lives Matter movement was rising, and I would see all of these conflicting empirical claims being made. If Black Lives Matter advocates pointed to the large disparities in the rates at which different people were stopped by police, their opponents might say the difference could be explained by criminal conduct. And the data didn’t really provide good tools for pushing back at that. We haven’t had great ways of conclusively showing that people who are behaving in the same way are being treated differently based on their race. And that’s because nobody collects systematic data covering all criminal behavior.

Stamler-Goody: You argue that criminal conduct has played an exaggerated role in the research on racial disparities in policing. How so—and why is it an issue?

Starr: The most common thing that police and defenders of police say in the public debate is that differences in crime rates explain policing disparities. But first, the data they point to are very often based on rates of being arrested for crimes—and an arrest is a policing decision that itself might be the product of disparity. So if you argue that race gaps occur because of crime differences, and the evidence of crime differences you point to is, for example, that Black people are four times as likely to be arrested for robbery—that’s a very circular argument.

There are also some specific points I made in that paper about the way that people lie with statistics and crime data. For example, when people argue that stop rates or arrest rates should be proportional to crime rates (rather than to the demographics of the population as a whole), they are implicitly assuming that everybody who gets stopped or arrested by the police is in fact guilty of a crime. But that is very far from being true. In New York City, for example, those researching stop-and-frisk practices found that Black and Brown people, especially Black people, were vastly more likely to be stopped by the police. And defenders of the NYPD claimed it was because of crime differences. But if you looked at the stop-and-frisk outcome data that police themselves collected, virtually nobody that they stopped and frisked had any contraband on them, much less anything dangerous like a weapon. The vast majority of people they stopped were completely innocent.

So if you have a program that mainly stops people who are doing nothing wrong, then pointing to crime rates to try to justify those disparities is really beside the point. You’re still overwhelmingly burdening innocent people of some racial groups much more than others.

Stamler-Goody: You suggest a technique for gathering data on disparate policing called auditing, in which pairs of people of different races test whether police respond differently to very similar behavior. How would this work, and what might it tell us?

Starr: Lots of people, including me, have used auditing to analyze racial disparities in other areas. In the employment context, I did a study with 15,000 fictitious online job applications that were otherwise identical but varied based on race. So I wrote a paper exploring whether it would be possible to do something like that in the context of policing, where you basically have two people act the same way and see if the police treat them differently. The obvious challenge is that it sounds dangerous, but I think there could be some limited contexts in which it would be relatively safe. You could imagine people engaging in something very minor, such as loitering, and seeing if they get stopped. I think a setup like that would be pretty low risk—but not zero risk. Whenever you get police officers carrying guns involved, you don’t have complete control over the environment.

The best way to accomplish it might be to have police engage in a self-testing program, where they would use undercover officers as the testers. I think that would be more defensible, partly because police officers always take on some risk in their roles. They could have extensive training, and they could also have backup available just out of sight. Police departments already have engaged in similar self-testing programs in other contexts, like anticorruption enforcement and “red teaming” exercises for counterterrorism training. I think that could be a way to get some really useful information. To be clear, though, the information would only address a very specific type of discrimination: police officers treating people who are engaged in identical conduct in the same neighborhoods differently based on their race. It wouldn’t address more systematic, structural sources of discrimination, including the way police are allocated across neighborhoods.

Stamler-Goody: What advice would you give to consumers wishing to better understand research on race and policing?

Starr: First of all, be skeptical. As human beings we tend to be drawn to numbers that support what we think to be true. Consider the fact that, as I mentioned, data that comes from the criminal justice system is already shaped by the discretionary decisions of criminal justice actors. When people talk about differences in crime, realize that this is an area in which we don’t have good data because we can’t collect data on all crime, we can only collect data on reported crime.

Also, ask yourself, is the data being presented normatively important, or is it more of a non sequitur? Looking at the stop-and-frisk example—do disparities in crime rates actually matter when you still have this overwhelming problem of Black and Brown people who are innocent being stopped?